By Michael-Patrick Moroney

Let’s begin with a scene.

It’s 2:14 a.m. in Tulsa. A woman sits cross-legged on her bed, scrolling through her phone in the dark. Her fingers hesitate over a text to an ex she knows she shouldn’t send. Instead, she taps open a chatbot and types, “I’m not okay.”

The response comes in less than a second: “That sounds really hard. I’m here with you. Want to talk about what happened?”

What might once have seemed dystopian, a machine offering emotional comfort, is now part of millions of daily interactions. And for many, it's more than just comfort. It's clarity. It's calm. It's connection.

Welcome to the soft revolution in emotional attention, led not by counselors, therapists, or call center agents, but by large language models, chatbots, and AI. These technologies are reimagining what it means to be "heard," especially for those who won't (or can't) seek it from another human.

The Therapist That Doesn't Blink

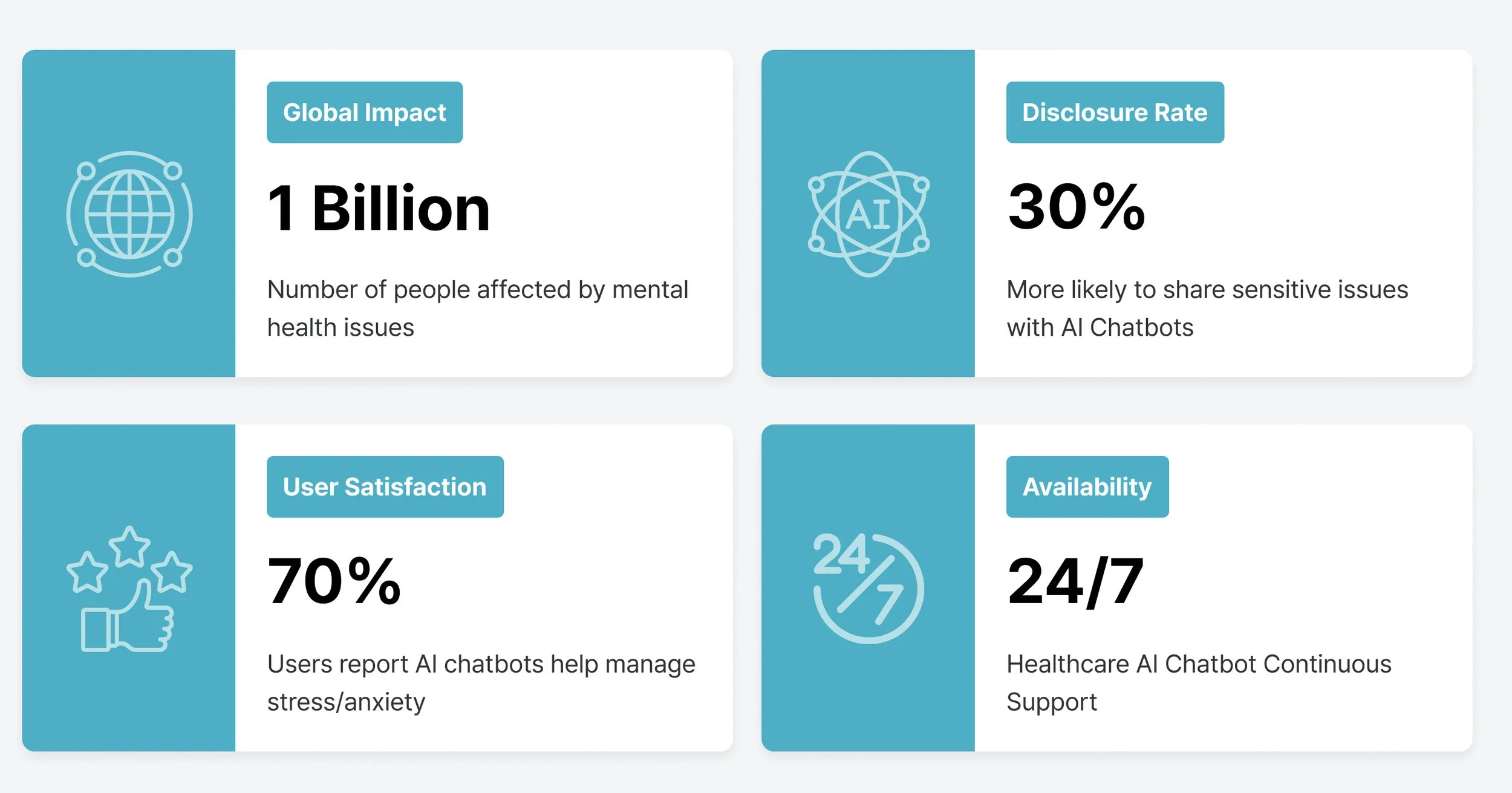

Source: Makebot, 2024

The role of AI in mental health support has grown rapidly, and quietly. Woebot, Wysa, Replika, and Inflection's Pi are among several apps now serving as emotional companions to millions. Unlike the early rule-based bots, many of today's offerings are powered by generative AI trained on vast corpora of therapy transcripts, patient forums, and emotional support conversations. The result? An experience that feels uncannily personal, almost comforting.

Kat Woods, a 35-year-old entrepreneur and former Silicon Valley startup lead, described her experience using ChatGPT for self-therapy. “It’s more qualified than any human therapist I’ve worked with,” she said. “It doesn’t forget things, it doesn’t judge, and it’s available at three in the morning when I’m spiraling.” Woods estimates she spent over $15,000 on traditional therapy before turning to AI. “This just works better for me.”

She is not alone. A study of people using generative AI chatbots for mental health support, published in npj Mental Health Research, reported high engagement and positive outcomes, including improved relationships and healing from loss and trauma. One participant said, "It happened to be the perfect thing," describing the AI chatbot as an "emotional sanctuary" that had provided "sensitive guidance" and a "joy of connection."

Part of the appeal is simple: to some, AI feels safer than people. One user said, "I was crying, and the bot just let me talk. It didn't push. It didn't try to fix me. It just responded."

The Clerk Who Doesn't Get Irritated

Consider something less poetic: submitting an insurance claim.

Anyone who has spent time on hold with an insurance bureaucracy or government agency knows the feeling of talking into a void, or worse, to someone who seems to want to be your worst nightmare. For most of us, customer service is one long negotiation with empathy fatigue.

This is where AI's lack of emotion becomes an advantage.

In 2025, Allstate Insurance began using OpenAI-powered agents to generate nearly all of its customer claim messages. Human representatives still reviewed the messages before they went out, but the AI wrote the first drafts, with a difference: it was trained not only on legal terminology and policy data but also on best practices for expressing empathy, reassurance, and patience.

The result, according to Allstate Chief Information Officer Zulfi Jeevanjee, is a radical improvement in customer interactions. The AI-generated emails are less jargon-filled and more sympathetic, improving communication between the insurer and its customers overall.

Zendesk's 2025 CX Trends Report points the same way: 64% of consumers say they're more likely to trust AI-powered agents that feel friendly and empathetic.

Empathy Without Ego

When judgment isn’t part of the conversation.

What's perhaps most surprising is how often AI outperforms humans at sounding human.

A 2023 study in JAMA Internal Medicine compared physician and ChatGPT responses to patient questions posted on the r/AskDocs subreddit. A panel of licensed health care professionals, blinded to which answer came from where, rated ChatGPT's responses as higher in quality and more empathetic than the physicians' responses. Overall, ChatGPT's response was preferred in 78.6% of evaluations.

That doesn't mean the bot is empathetic; it isn't. But users, honestly, don't care.

Dr. Adam Miner, a Stanford clinical psychologist who studies AI in medicine, explains: "If the affective tone sounds sincere and consistent, people respond. People's brains are computing that tone as kind, even when they know it's artificial."

In another study on empathic design in chatbots, users reported a greater willingness to share vulnerable information with AI than with human therapists. The reason? “It can’t judge me,” said one participant. “It doesn’t care if I’ve messed up my life.”

And because it can’t be hurt, insulted, or burned out, AI doesn’t retreat emotionally when the user becomes difficult, angry, or repetitive.

The Bureaucrat Who Always Calls You Back

The public sector is taking notice. Governments around the world are eyeing AI as a way to streamline citizen services, especially in areas long mired in inefficiency and public distrust.

In the UK, the Government Digital Service has tested a generative AI assistant to handle high-volume questions on GOV.UK. Although the tool is still in development, preliminary results show faster resolution times and higher user satisfaction, especially among people with low digital literacy.

In Japan, call center operators are turning to AI to help employees cope with "customer harassment," including abuse from angry callers. One telecom company has even experimented with software that softens the tone of a caller's voice before the operator hears it.

In the US, cities like Los Angeles are piloting AI systems to triage citizen service requests, such as 311 complaints and applications for housing assistance.

What these projects share is not so much efficiency as tone.

"People don't begrudge the automation so much as they begrudge being dismissed," said Mariel Santos, a civic tech consultant who advises several state governments. "If the AI politely, clearly, and patiently responds, that is better although it is a machine."

The Emotional Engineering of AI

Don’t forget: there are real people at the other end.

Good grammar alone isn't enough to create a "polite" machine.

Companies training AI to provide emotional support fine-tune models on human-curated datasets of therapy sessions, crisis hotline calls, and courteous customer interactions. They use reinforcement learning from human feedback (RLHF) to reward responses that human raters judge empathetic, de-escalating, or tactful.

They also use guardrails: hard-coded rules that keep the model from offering medical or legal advice, steer the conversation in emergencies (a message about suicidal thoughts, for instance, triggers a response with crisis hotline information), and clearly disclose that the agent isn't human.
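To make the guardrail idea concrete, here is a deliberately simplified Python sketch of a rule layer that sits in front of a language model. Everything in it is hypothetical: the keyword patterns, the reply wording, and the `guarded_reply` and `generate` names are illustrative only, and production systems generally rely on trained safety classifiers and clinical review rather than regular expressions. The one real-world detail is 988, the US Suicide & Crisis Lifeline number.

```python
import re
from dataclasses import dataclass
from typing import Callable

# Hypothetical keyword patterns for illustration only. Real products
# typically use trained safety classifiers, not hand-written regexes.
CRISIS_PATTERNS = [r"\bkill(ing)? myself\b", r"\bsuicid", r"\bend my life\b"]
ADVICE_PATTERNS = [r"\bdiagnos", r"\bdosage\b", r"\blawsuit\b", r"\blegal advice\b"]

# Canned replies (hypothetical wording). 988 is the US Suicide & Crisis Lifeline.
CRISIS_REPLY = ("It sounds like you're carrying a lot right now, and you deserve support. "
                "In the US, you can call or text 988 to reach the Suicide & Crisis Lifeline.")
ADVICE_REPLY = ("I can't give medical or legal advice, but I can help you "
                "prepare questions for a qualified professional.")
DISCLOSURE = "(You're talking with an AI assistant, not a person.)"


@dataclass
class GuardedReply:
    text: str
    route: str  # "crisis", "refuse_advice", or "model"


def matches_any(message: str, patterns: list[str]) -> bool:
    """Return True if any pattern occurs in the message (case-insensitive)."""
    return any(re.search(p, message, re.IGNORECASE) for p in patterns)


def guarded_reply(message: str, generate: Callable[[str], str]) -> GuardedReply:
    """Apply rule-based guardrails before (or instead of) calling the model.

    `generate` is a hypothetical stand-in for whatever function calls the
    language model. Crisis checks run first so they always take priority.
    """
    if matches_any(message, CRISIS_PATTERNS):
        return GuardedReply(f"{CRISIS_REPLY} {DISCLOSURE}", "crisis")
    if matches_any(message, ADVICE_PATTERNS):
        return GuardedReply(f"{ADVICE_REPLY} {DISCLOSURE}", "refuse_advice")
    # No rule fired: let the model answer, but still disclose that it is an AI.
    return GuardedReply(f"{generate(message)} {DISCLOSURE}", "model")


if __name__ == "__main__":
    # A stub in place of a real model call.
    def stub(msg: str) -> str:
        return "That sounds really hard. I'm here with you."

    print(guarded_reply("I'm not okay.", stub).route)  # prints "model"
```

Checking crisis patterns before anything else means a message that mentions both self-harm and, say, medication still gets the crisis response rather than a refusal.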

Even with all those safeguards, ethical questions remain. What if someone grows psychologically attached to an AI companion? What if the bot gives advice that is empathetic but badly wrong?

“We’re not building therapists,” said Tessa Jacob, head of safety at an AI mental health startup. “We’re building tools. And tools require oversight.”

The Future: Hybrid, Not Replacement

Even if it’s not real, it helps.

Most experts agree: AI won’t replace human empathy. But it may become its most reliable assistant.

Envision a therapy practice where an AI tracks mood over time, flags patterns, and offers journaling prompts between sessions. Or a government benefits platform that answers citizens in plain language and hands off to a human caseworker only when it has to. Or a call center where the bot handles the first five minutes, and always says thank you.

These are not futures still to come. They're arriving now.

"AI is best used to unload emotional labor off our plates so we can be more human where it counts," said Dr. Zhao. "Let it do the 10 p.m. anxiety spiral, the insurance appeal template, the waitlist welcome call."

And although the idea of confiding your secrets to a string of probabilities might once have seemed cold, the truth is more complicated.

Sometimes we just need to be heard. And the machine listens around the clock.