By Michael-Patrick Moroney

In 1950, Alan Turing posed the question that would set the course for the next century of computing: "Can machines think?" To explore it, he imagined a parlor game - the imitation game - in which a human judge would converse separately with a human and a machine, through text alone. If the judge couldn't consistently tell them apart, the machine was said to have passed the test.

For decades, the Turing Test was the Mount Everest of AI. Early attempts were farcical - chatbots such as ELIZA could mimic a therapist's queries but were exposed within moments. Even into the 1990s and 2000s, most "AI" was brittle, rule-based, and self-evidently mechanical. The joke was that we kept moving the goalposts, redefining "intelligence" every time computers closed the gap.

Where We Are Now

By 2025, you can have a natural, intelligent conversation with an AI that is familiar with your tastes, modulates its tone, and - most of all - seems to understand you. It can generate photorealistic images, mimic your voice, and even imitate your sense of humor. The original Turing Test has effectively been passed in millions of everyday settings without anyone really noticing.

The problem has flipped. The question is no longer whether a machine can fool us - it's that machines can now fool anyone. Which means the real challenge of our time is the Reverse Turing Test: can you get someone else (or a system) to believe you're not a machine?

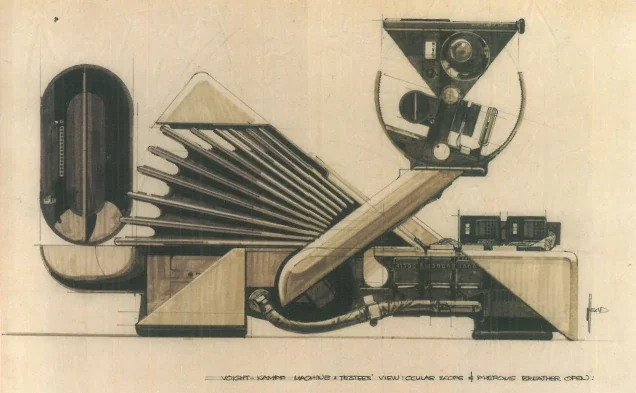

Voight-Kampff test concept art by Syd Mead

The Near Future

Over the next five years, the internet is going to become a stranger place. Social media feeds will mix human and machine posts so seamlessly that, without verification, there will be no telling where a post came from. Dating apps will be filled with witty, engaging bots. Job candidates will be able to outsource entire interview sessions to machines.

Platforms will push back with passive Reverse Turing Test (RTT) systems - behind-the-scenes checks on your keystroke patterns, the micro-pauses in your speech, the moments your cursor hesitates before clicking. Authentication will no longer be an occasional one-off CAPTCHA, but continuous and imperceptible - until you fail it.
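To make the keystroke idea concrete, here is a minimal sketch of one signal such a system might look at: how evenly spaced the key presses are. Everything in it is an assumption made for illustration, including the function names, the sample timings, and the 0.1 threshold. A real platform would presumably combine many signals and tune them on real data.

```python
from statistics import mean, pstdev


def inter_key_intervals(timestamps_ms):
    """Gaps between consecutive key presses, in milliseconds."""
    return [later - earlier for earlier, later in zip(timestamps_ms, timestamps_ms[1:])]


def timing_variability(timestamps_ms):
    """Coefficient of variation of the gaps (0.0 means a perfectly even rhythm)."""
    gaps = inter_key_intervals(timestamps_ms)
    if len(gaps) < 2:
        raise ValueError("need at least three key presses")
    average_gap = mean(gaps)
    if average_gap <= 0:
        raise ValueError("timestamps must be increasing")
    return pstdev(gaps) / average_gap


def looks_scripted(timestamps_ms, threshold=0.1):
    """Hypothetical rule: flag typing whose rhythm is suspiciously even."""
    return timing_variability(timestamps_ms) < threshold


# Made-up sample data: one metronome-like sequence, one uneven sequence.
bot_like = [0, 100, 200, 300, 400, 500]
human_like = [0, 140, 210, 480, 560, 900]

print(looks_scripted(bot_like))    # even rhythm, so it is flagged
print(looks_scripted(human_like))  # uneven rhythm, so it is not flagged
```

A single threshold like this would be trivial for a bot to defeat by adding random delays, which is part of why these checks are expected to be layered and continuous rather than one-off.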

The Long Game

With time, the RTT becomes not just an anti-fraud measure but a social passport. You might carry a cryptographic "proof of humanity" credential issued by a trusted authority. Such credentials could be embedded in messaging apps, marketplaces, even voting systems.
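As a rough sketch of what "issued by a trusted authority" could mean in practice, the example below has an issuer attach a tamper-evident tag to a claim, and a verifier check it. The key, field names, and functions are all hypothetical. It uses an HMAC from Python's standard library as a stand-in; because HMAC is symmetric, the verifier here must share the issuer's secret, whereas a real credential scheme would more likely use public-key signatures so that anyone can verify without being able to issue.

```python
import hashlib
import hmac
import json

# Hypothetical issuer secret, for demonstration only.
ISSUER_KEY = b"demo-secret-not-for-real-use"


def issue_credential(subject_id, key=ISSUER_KEY):
    """Issuer side: bind a 'this is a human' claim to a subject with a keyed tag."""
    claim = json.dumps({"sub": subject_id, "human": True}, sort_keys=True)
    tag = hmac.new(key, claim.encode("utf-8"), hashlib.sha256).hexdigest()
    return {"claim": claim, "tag": tag}


def verify_credential(credential, key=ISSUER_KEY):
    """Verifier side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, credential["claim"].encode("utf-8"), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, credential["tag"])


credential = issue_credential("user-123")
print(verify_credential(credential))  # an untouched credential verifies

# Editing the claim without the key invalidates the tag.
forged = dict(credential, claim=credential["claim"].replace("user-123", "bot-999"))
print(verify_credential(forged))
```

Note that this only proves the issuer vouched for the claim. How the issuer decides someone is human in the first place is the hard part, and the sketch says nothing about it.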

There's a risk involved: proof-of-human systems could drift into surveillance, learning more about your habits than you'd like. The balancing act between trust and privacy will decide whether RTTs make the internet more livable - or more oppressive.

Why We Need an Updated Turing Test

The first Turing Test was about proving that computers can think. The new one is about preserving the value of human presence online. Without it, the web becomes a hall of mirrors where every reflection could be a fake.

It's not just about catching scams or deepfakes. It's about trust, reputation, and the right to be believed when you say, "It's really me."

Your Reverse Turing Test Toolkit

Here are some prompts and tricks that ordinary users can try to work out whether they're talking with a human or a bot. They work best in live conversation - text, voice, or video - where timing and subtlety matter.

1. Request a sensory memory.

"What was the last thing you smelled that reminded you of your childhood?"

Humans retrieve these slowly and affectively; AI generates them with eerie ease.

2. Request a mundane, personal anecdote.

"Tell me the most annoying thing that happened to you this morning."

Humans fall back on weirdly detailed, sometimes trivial stories. AI falls back on generic gripes.

3. Use deliberate ambiguity.

"I saw her duck."

Drop in a sentence with a double meaning - humans will ask for clarification; AI may plow on with one reading.

4. Casually make a factual error.

"I love how New York City is home to the highest mountain in the US."

A human will snicker or wave it away. AI will correct you on the spot.

5. Ask for a bad joke off the top of their head.

Humans hesitate, groan, and sometimes give up mid-sentence. AI will provide a perfectly formed, cheesy punchline.

6. Ask for 'long-tail' cultural memory.

"Do you remember the jingle for that weird cereal in the '80s?"

Humans rummage through personal memory banks; AI may respond with overenthusiastic invention.

7. Ask something emotionally heavy.

"What's the hardest goodbye you've ever had to say?"

Humans will naturally pause, hedge, or shift tone when asked something poignant; AI tends to mistime its empathy.

8. Abruptly shift topics.

Humans tend to respond with a "Wait - what?" AI will pivot neatly without remark.

The Bottom Line

Alan Turing framed his test as a game of mimicry. Seventy-five years later, the game has changed. The best move today may be to lean on what the machines still cannot mimic - our randomness, our uncertainties, our faulty memories. At a time when every word, every picture, every voice could be simulated, being unmistakably human is the new competitive advantage.