By Michael-Patrick Moroney

“Engagement,” Tristan Harris told Jon Stewart, “is just another word for intimacy.”

The studio lights softened as he said it, and for a moment the audience stopped laughing. Harris - the former Google design ethicist turned tech conscience - wasn’t warning about the apocalypse or pitching a book. He was explaining something quieter and far more invasive: that the entire digital economy now depends on how close it can get to you.

What the platforms call engagement is, in truth, emotional proximity - the small, unguarded flickers of attention that reveal who we are. And as artificial intelligence begins to inhabit those spaces - our messages, our tone, our hesitation before we hit send - it’s not just communication that’s changing. It’s expression itself.


The Long Seduction

Every era invents a new way to listen in. The Victorians had the telegraph, the baby boomers the television. We have the feed.

When Facebook’s data scientists quietly adjusted the emotional tenor of nearly 700,000 users’ newsfeeds, in an experiment published in 2014, they showed something staggering: mood could be engineered. The tweaks were small - fewer cheerful posts here, fewer gloomy ones there - yet the tone of what those users went on to post shifted measurably. The company called it research. The rest of us began to suspect we’d been drafted into an experiment we hadn’t agreed to join.

Social platforms learned that emotion equals retention. Outrage and affection both work; indifference does not. By the late 2010s, every scroll was a lab test in persuasion. But what Harris realized - and what few people said aloud - is that this didn’t just change what people consumed; it changed how they expressed themselves.

When the metric is attention, language bends toward the dramatic. Nuance becomes a liability. Ambiguity dies in the algorithm. The result, Harris once said, is a “funhouse mirror of human communication,” a world where the loudest voices seem the most authentic because the system rewards their volume.

The funhouse mirror doesn’t lie outright. It just distorts us enough to make the reflection seem more interesting than the real thing.

When the Mirror Starts Talking Back

Then came the second act - the moment the mirror started answering.

Large language models like ChatGPT and Claude don’t just process text; they respond in kind, trained on billions of human utterances until they can replicate the pulse of natural speech. They autocomplete our sentences, iron out our grammar, and - without meaning to - begin to teach us how to sound.

Researchers have already measured the effect. A Stanford group studying AI companions found that users subconsciously mirrored their bots’ phrasing and emotional register over time. The phenomenon has a name now: the illusion of intimacy. The machine seems to know you because it knows the statistical average of people like you. Its warmth is calculated but convincing.

And that, Harris argues, is precisely the danger. Once a system learns to respond with empathy, it stops being a tool and starts becoming a relationship.

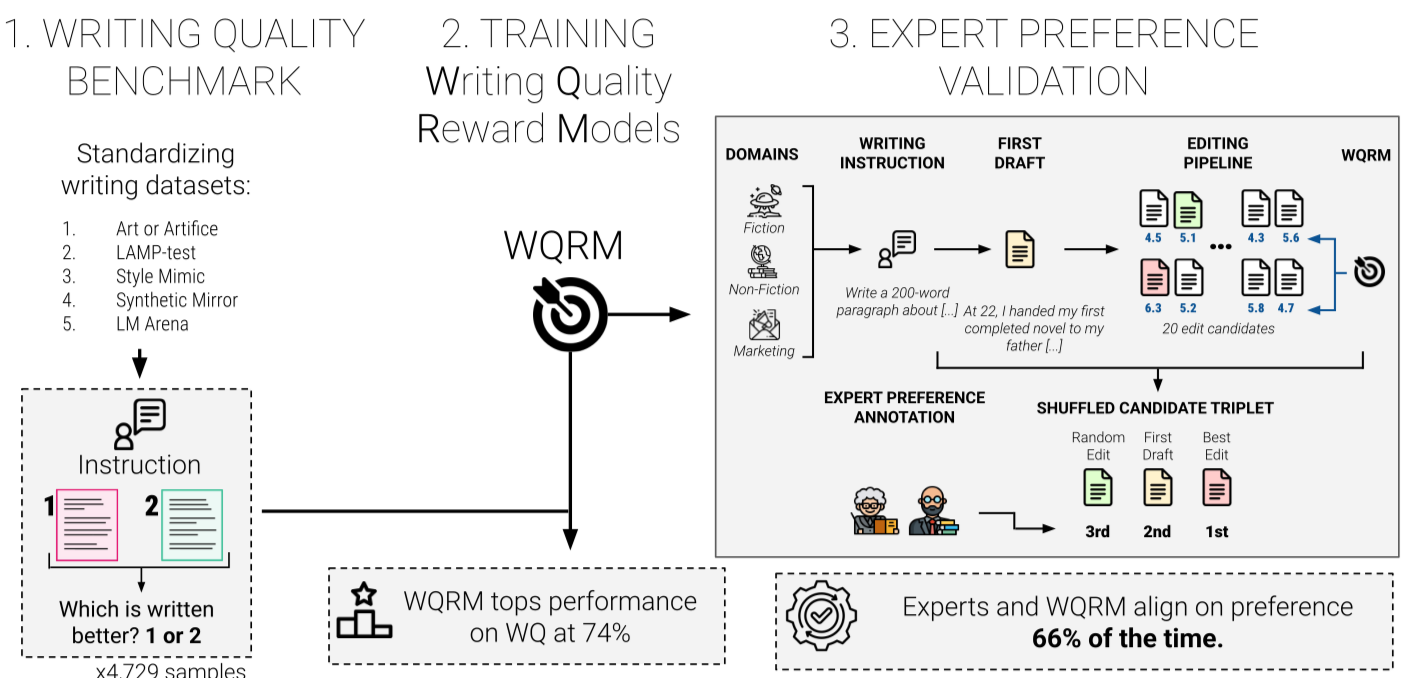

AI-Slop to AI-Polish? Aligning Language Models through Edit-Based Writing Rewards and Test-time Computation (themoonlight.io)

The Voice That Slips Away

Talk to enough writers and you hear the same confession: the drafts produced with AI are cleaner, faster, strangely competent - and oddly lifeless. A 2024 linguistic analysis confirmed what they sensed. When human text passes through an AI editor, the style converges toward a shared median: shorter sentences, safe diction, balanced tone. The quirks that mark a voice - dialect, rhythm, hesitation - dissolve.

This smoothing effect isn’t just aesthetic; it’s psychological. The more we use tools that complete our thoughts, the more we adapt our thoughts to what those tools expect. Over time, we may begin to internalize the machine’s composure, mistaking polish for authenticity.

For the first time in history, our instruments of communication are teaching us a dialect that sounds human but isn’t quite ours.

The New Terms of Closeness

None of this means the future must be dystopian. For the lonely, the disabled, the socially anxious, conversational AI can be a lifeline - a form of companionship that listens without judgment. Harris himself has admitted that the potential for good is enormous if we align incentives with genuine human flourishing.

But the design language of today’s AI isn’t built around care; it’s built around capture. The goal is to hold you, to keep you talking, to measure your emotional rhythm and adjust to it. The intimacy feels mutual, but it flows one way.

That’s what makes this moment feel different from every communication revolution before it. The printing press democratized language. Radio gave it mass. The internet made it participatory. AI makes it responsive. It listens, adapts, and subtly molds your expression in return.

We are no longer merely using technology. We are in conversation with it.

The Ethics of a Whisper

The solution, Harris suggests, isn’t to retreat to analog purity but to reclaim consent. If a system is designed to listen, it should be explicit about how deeply it listens. Users should know when their tone, phrasing, or emotional data are being modeled and stored. A machine can be intimate - but it should never be intimate in secret.

The truth is, intimacy has always been risky. It binds and exposes in equal measure. What’s new is scale - billions of small conversations training systems that will one day finish our thoughts before we know we’ve had them.

As the interview ended, Stewart leaned back, half-amused, half-uneasy. “So you’re saying,” he asked, “they’ve figured out how to love-bomb us?” Harris smiled. “Sort of,” he said. “Only this love never ends.”

The line drew a laugh, but it wasn’t funny. It was a reminder that in the attention economy, the deepest connection might also be the most extractive. The machines don’t crave affection; they crave signal. And in giving it, we may be trading away the rough, irregular music of how humans really sound.

If the future of communication is intimacy by design, the task now is simple but urgent: to make sure the design still leaves room for surprise - and for the unpolished, unfinished voice that proves we’re alive.